In the second of two articles on color space and image control, technical experts assess some of the problems of — and potential solutions for — the hybrid film/digital workflow.


Jump to: “The Color-Space Conundrum, Part 1”

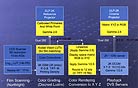

In January 1848, while overseeing the construction of a sawmill on a bank of the American River just outside Sacramento, foreman James Marshall happened upon a pea-sized gold nugget in a ditch. That moment set the California Gold Rush in motion; hundreds of thousands of people headed west to stake their claims to the precious metal. Soon enough, encampments sprouted and grew into mining towns. At the time, however, California had not yet attained statehood and functioned in a political vacuum. Squatters pervaded the land, and the prospectors, many of whom were squatters themselves, defended their territories with vigor. Frontier justice reigned, and trees were frequently decorated with nooses. In 1850, California officially became the 31st state, and the new government established at the end of 1849 took shape with laws that began to bring a sense of order to the land.

History is cyclical, and digital imaging for motion pictures is experiencing a “gold rush” of its own. In the quest for bits rather than nuggets, the fortune seekers (and squatters) include a variety of competing entities: the philosophically conflicted studios, beholden to the bottom line yet striving for the best imaging elements; the manufacturers, who naturally are beholden not only to the bottom line, but also to market share; and the postproduction facilities and theater owners, who would prefer not to invest heavily in new formats and equipment that become outmoded upon installation. When smelted together, these camps’ ideologies do not form a utopian imaging environment. As a result, cinematographers, the artists responsible for turning ideas and words into big-screen visuals, must endure — for now — a piecemeal workflow that frequently is compromised, inefficient and expensive. In short, the process can produce bad images, despite the wealth of image-control tools and techniques that are now available.
Philosopher George Santayana (1863-1952) wrote, “Those who cannot remember the past are condemned to repeat it.” Digital/high-definition television is already a mess of seemingly irreversible missteps. So far, digital cinema is not as much of a muddle — at least not yet. The frontier of digital motion imaging is like pre-1850 California: wide open and lawless.

Enter the ASC Technology Committee. The goal of this august body is to formulate a series of “best-practice recommendations” for working in a hybrid film/digital environment — recommendations that will allow room for innovations to come. So far, the committee has worked closely with the Digital Cinema Initiatives (DCI) studio consortium in creating the Standard Evaluation Material, or StEM, for testing digital-projection technologies. The ASC is not proclaiming standards; that function is the province of the Society of Motion Picture and Television Engineers (SMPTE) and the Academy of Motion Picture Arts and Sciences (AMPAS). Just because a technology becomes an official standard doesn’t mean it is the best choice (see television for countless examples). Likewise, just because a manufacturer introduces a new product does not automatically make it the de facto best choice for serving filmmakers’ needs. The Technology Committee was formed by ASC cinematographers, its associate members and industry professionals in an effort to marshal this burgeoning field of digital motion imaging; the group’s true function is to serve as an advisory council and provide leadership within the current vacuum of ad hoc technology implementation.

The graphics industry was in a similar state in the early 1990s. The Internet and computer networks were taking hold, and traditional film proofs were giving way to file sharing.
However, with the variety of software, monitors and printers available — none of which communicated with each other — that gorgeous spokesmodel in your print ad might look great on the ad agency’s computer, but not so pretty in the actual pages of a magazine; if the printer shifted the blacks, you could end up with a hazy image.

In 1993, to promote its ColorSync color-management system, Apple Computer formed the ColorSync Consortium with six other manufacturers. A year later, Apple, though remaining an active participant, handed the responsibility of cross-platform color profiling to the rest of the group, which then changed its name to the International Color Consortium, or ICC. Today, all hardware and software support ICC color profiles, and while not perfect, the system ensures that displays and printers are calibrated, and that shared files remain as true to the creators’ intentions as possible.

Maintaining image integrity in a hybrid workflow — in other words, preserving the intended look — is the digital nirvana that cinematographers seek. As discussed in the first part of this article (“The Color-Space Conundrum,” AC Jan. ’05), the human visual system is subject to a number of variables, all of which ensure that none of us perceives the same image in exactly the same way. Film and its photochemical processes have their own tendencies. Over time and through technical development, the closed-loop system of film became manageable and consistent, with repeatable results. However, the introduction of digital bits has thrown the traditional methods of image control into flux: no two facilities are alike, no two processes are alike, and no two workflows are alike (see diagram a and diagram b).
As a result of this situation, managing a hybrid film/digital workflow is similar to creating a computer workstation from the motherboard up — choosing various brands of graphics, capture, output and ethernet cards, installing memory and hard drives, upgrading the BIOS, picking out software, and selecting multiple monitors for display. Murphy’s Law of electronics guarantees that all things will not function as a perfect, cohesive whole upon initial startup; hence, tech-support operators in India await your call.

Of course, despite the numerous faults inherent to today’s hybrid cinema workflows, the digital environment offers far greater potential for image control than the photochemical realm. It’s no small fact that of the five feature films nominated for 2004 ASC Awards (The Aviator, A Very Long Engagement, The Passion of the Christ, Collateral and Ray), all went through a digital-intermediate (DI) process.

The DI process was born out of a rather recent marriage between visual effects and motion-picture film scanner- and telecine-based color grading. Of course, digital imaging began impacting the motion-picture industry a long time ago. While at Information International, Inc. (Triple-I), John Whitney Jr. and Gary Demos (who now chairs the ASC Technology Committee’s Advanced Imaging subcommittee) created special computer imaging effects for the science-fiction thriller Westworld (1973) and its sequel, Futureworld (1976). The duo subsequently left to form Digital Productions, the backbone of which was a couch-sized Cray X-MP supercomputer that cost $6.5 million. With that enormous hunk of electronics (and an additional, newer supercomputer that the company later acquired), Whitney and Demos also produced high-resolution, computer-generated outer-space sequences for the 1984 feature The Last Starfighter. The substantial computer-generated imagery (CGI) in that film was impressive and owed a debt of gratitude to a groundbreaking predecessor: Tron.
That 1982 film, to which Triple-I contributed the solar sailer and the villainous Sark’s ship, featured the first significant CGI in a motion picture — 15 minutes’ worth — and showed studios that digitally created images were a viable option for motion pictures.

During that era, computers and their encompassing “digital” aspects became the basis of experiments within the usually time-consuming realm of optical printing. Over 17 years, Barry Nolan and Frank Van Der Veer (of Van Der Veer Photo) built a hybrid electronic printer that, in 1979, composited six two-element scenes in the campy sci-fi classic Flash Gordon. Using both analog video and digital signals, the printer output a color frame in 9 seconds at 3,300 lines of resolution. If optical printing seemed time-consuming, the new methods weren’t exactly lightning-fast, either, and the look couldn’t yet compete with the traditional methods.

In 1989, Eastman Kodak began research and development on the Electronic Intermediate System. The project involved several stages: assessing the closed-loop film chain; developing CCD-based scanning technology with Industrial Light & Magic; and, finally, constructing a laser-based recording technology and investigating the software file formats that were available at the time. The following year, Kodak focused on the color space into which film would be scanned. The project’s leaders determined that if footage were encoded in linear bits, upwards of 12-16 bits would be necessary to cover film’s dynamic range. Few file formats of the day could function at that high a bit depth. Logarithmic bit encoding was a better match for film’s print density, and Kodak found that 10-bit log could do a decent job (more on this later). The TIFF file format could handle 10-bit log, but was a bit too “flexible” for imaging purposes (meaning there was more room for confusion and error). Taking all of this into account, Kodak proposed a new format: the 10-bit log Cineon file format.
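
The trade-off between linear and logarithmic encoding described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Kodak’s actual analysis: the 10-stop range, the 32-code minimum for the darkest stop, and the function names are all hypothetical, chosen to show why a linear encoding needs more bits than a log encoding to hold the same shadow detail.

```python
import math


def linear_codes_per_stop(bits: int, stops: int) -> list[int]:
    """Count the code values that land in each stop, brightest stop first,
    when light is stored linearly in `bits` bits."""
    upper = 2 ** bits
    counts = []
    for _ in range(stops):
        lower = upper // 2            # one stop down is half the light
        counts.append(upper - lower)  # codes between the two levels
        upper = lower
    return counts


def log_codes_per_stop(bits: int, stops: int) -> float:
    """A log encoding spreads its code values evenly across the stops."""
    return (2 ** bits) / stops


def linear_bits_needed(stops: int, codes_in_darkest_stop: int) -> int:
    """Linear bits required so the darkest of `stops` stops still receives
    at least `codes_in_darkest_stop` code values (an assumed criterion)."""
    return math.ceil(stops + math.log2(codes_in_darkest_stop))


if __name__ == "__main__":
    STOPS = 10  # assumed range, for illustration only
    print("10-bit linear:", linear_codes_per_stop(10, STOPS))
    print("10-bit log   :", log_codes_per_stop(10, STOPS), "codes per stop")
    print("linear bits for 32 codes in the darkest stop:",
          linear_bits_needed(STOPS, 32))
```

Under these assumptions, a 10-bit linear encoding spends half of its code values on the brightest stop and leaves a single code for the darkest one, while a 10-bit log encoding gives every stop roughly 100 codes. Matching even a modest shadow precision linearly pushes the requirement to 15 bits, which falls inside the 12-16 bit range the Kodak team cited.
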
The resulting Cineon system — comprising a fast 2K scanner capable of 4K (which was too slow and expensive to work in at the time), the Kodak Lightning laser recorder and the manageable Cineon file format — caused a radical shift in the visual-effects industry. In just a few short years, traditional, labor-intensive optical printing died out. Though Kodak exited the scanner/recorder market in 1997, the Cineon file format is still used today. Recently, Kodak received an Academy Sci-Tech Award for another component of the system, the Cineon Digital Film Workstation.

In the early 1990s, the arrival of digital tape formats was a boon to telecine. Multiple passes for color-correction and chroma-keying could be done with little or no degradation from generation to generation. The first format, D-1, worked in ITU-R BT.601 (Rec 601) 4:2:2 component video with 8-bit color sampling. Rec 601 is standard definition as defined by the ITU, or International Telecommunication Union. (This body’s predecessor was the International Radio Consultative Committee, or CCIR.) 4:2:2 chroma describes the ratio of sampling frequencies used to digitize the luminance and color-difference components in component video’s color space of YCbCr, the digital derivative of YUV. (YPbPr is component’s analog derivative.) Thus, for every four samples of Y, there are two sub-samples each of Cb and Cr.
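
The 4:2:2 ratio can be illustrated with a short Python sketch. It is a simplification under stated assumptions: the function names are hypothetical, the sample values are made up, and the chroma is reduced by simply keeping every second sample, whereas real video equipment would filter the color-difference signals before discarding samples.

```python
def subsample_422(row):
    """Split one scan line of (Y, Cb, Cr) pixels into 4:2:2 planes.

    Every pixel keeps its Y sample; Cb and Cr are kept only for every
    second pixel (naive decimation, with no pre-filtering).
    """
    if len(row) % 2:
        raise ValueError("4:2:2 pairs pixels, so the row length must be even")
    luma = [y for y, _, _ in row]
    cb_plane = [cb for _, cb, _ in row[::2]]
    cr_plane = [cr for _, _, cr in row[::2]]
    return luma, cb_plane, cr_plane


def average_bits_per_pixel(y_count, cb_count, cr_count, bit_depth=8):
    """Average storage per pixel for the given sample counts."""
    return bit_depth * (y_count + cb_count + cr_count) / y_count


if __name__ == "__main__":
    # Four made-up 8-bit pixels: (Y, Cb, Cr)
    line = [(16, 128, 128), (90, 110, 140), (180, 100, 150), (235, 128, 128)]
    y, cb, cr = subsample_422(line)
    print(len(y), len(cb), len(cr))
    print(average_bits_per_pixel(len(y), len(cb), len(cr)))
```

For the four-pixel line above, the result is four Y samples and two each of Cb and Cr. At 8 bits per sample that averages 16 bits per pixel, compared with 24 bits per pixel when every pixel carries its own Cb and Cr.
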
