A Clear Look at the Issue of Resolution


Steve Yedlin, ASC, offers an intriguing demonstration of how capture formats, pixel counts and postproduction techniques affect image quality, and why simply counting Ks is not a solution when selecting a camera.

While the “K Wars” continue to dominate many filmmaker and studio-level conversations about image quality and about which camera system is “best” for a given project, cinematographer Steve Yedlin, ASC (known for his work on such films as Brick, Looper, Carrie and the upcoming Star Wars: The Last Jedi) believes much of this discussion has been based more on advertising-influenced superstition than on observable evidence. His solution? Create an exhaustive, methodical, empirical presentation that would not only address the many issues swirling around this debate but also provide isolated-variable comparisons demonstrating how he arrived at his perspectives on these issues.

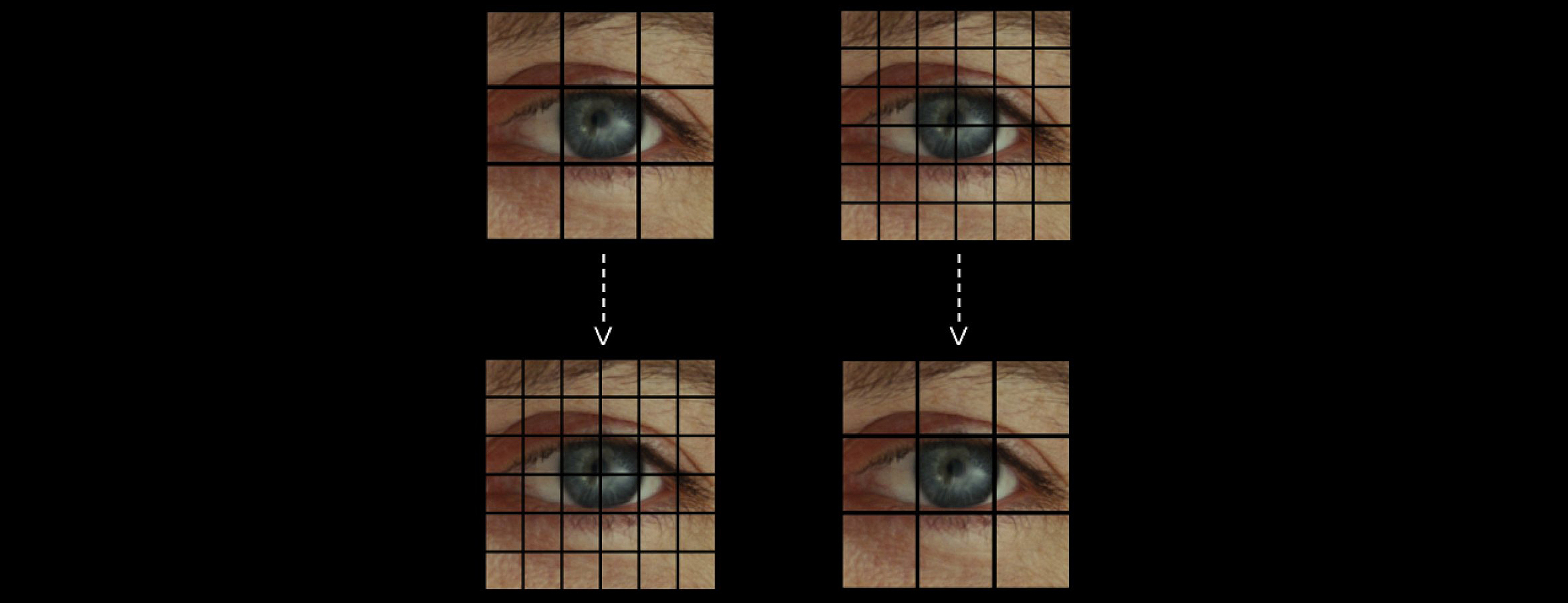

Yedlin’s provocative two-part primer, entitled On Acquisition and Pipeline For High Resolution Exhibition, largely consists of footage shot with six different camera systems, compared not only side by side but pixel by pixel, with some startling results.

The comparison footage used in the presentation was shot in 2016 using six different camera systems: Arri Alexa 65, Arri Alexa XT, Arri 435 (shooting 4-perf Super 35, which was scanned at 6K with an ArriScanner), Red Weapon 6K, Sony F55 and an Imax MSM3 (shooting 15-perf 65mm, scanned at 11K with Imagica).

Yedlin mastered the project in uncompressed 4K, and its primary release format is theatrical DCP, but it’s presented here in a somewhat compromised form to allow for wider distribution.

Check out the demo here.

American Cinematographer: What experiences or observations led you to create this presentation?

Steve Yedlin, ASC: The beginning of it was just having the same discussions over and over again, countering some really ingrained presuppositions that were commonly and strongly believed but were based not on observation or experience, just on advertising. I was spending a lot of time on these conversations and they commonly ended with someone saying, “Well, you have your opinion and I have mine,” even though one of those opinions was based on observable evidence and the other was based on presuppositions. So I figured that if I was spending that much time on this, then maybe I should really spend the time creating something a little more definitive that can be my answer to the question.

How did that inspire the methodology demonstrated here? Was there any trial and error that led to the final approach?

This was all stuff that I had already observed and techniques I’d already used myself when I want to understand something. It’s about controlling variables and looking at the claim that is being made in a scientific way. All too often, a lot of things are changing when supposedly just one thing is changing. For instance, and I’ve seen this many times, someone will give some kind of resolution demonstration, often someone who is going to profit from the outcome of that demonstration, and they show you two images on a screen and say, “This one is 4K and this one is 2K.” And that’s it. No other information is given about how those images were created and nobody asks any questions. Well, have they both been mastered in 4K? Or are we comparing what it’s like to use 2K as a source for a 4K master? Is one thing 2K all the way through and the other 4K all the way through? If we’re comparing them back-to-back, is this being done on a 4K projector? Doesn’t that mean the 2K is being scaled to 4K? How is that being done, given that there are different scaling algorithms which all affect the image differently? I’ve seen multiple situations where filmmakers or studio decision makers are shown something that’s meant to be a comparison, and they are being shown this not by a technology expert from their own company but by a vendor who stands to gain from whatever decision is made based on the demo they are giving. That’s not really a fair comparison situation. So these decisions are being made not only with entrenched presuppositions about what makes an image look the way it does, but also on the basis of false comparisons that are only nominally scientific and are actually more of a marketing manipulation.

What feedback have you received from your peers who have watched this?

Pure excitement. [Laughs.] And not only from cinematographers who have seen it, but visual effects and post house people. When it comes to technical people, this is all stuff that they have known for a long time. There’s actually no new information here, but they’re not the decision makers, and the decision makers are less prone to listening to them than they are to people who use vague wine-tasting terms to describe image quality. So people are excited because this gives them a tool that they can use to start a real discussion about image quality. Again, this is stuff they already know, but it’s being presented in a clear, observable way, and I speak the language that the decision makers understand. Directors of photography that I’ve shown it to tend to be excited for a different reason, which is that these are things that they knew on a gut level, but they maybe didn’t have the language or the science to back up what they observed and felt. I’ve talked to so many cinematographers who are frustrated because they can’t shoot with the camera they want to use or use the pipeline they want to use because of rigid presuppositions or mandates from studios, producers or directors. Some cinematographers don’t have the technical background to counter these things; they have only a vague sense that it’s wrong, and they lack the language or the evidence they need to back up their own beliefs and push back.

So essentially you have created a tool that anyone can use to educate themselves and really engage in this discussion.

That’s it, basically. There’s an aspect to it, however, that challenges all of us in regard to this deep-rooted paradigm that we’ve all been conditioned to see the world through, which is that a camera brand determines the final look of the image. So someone might say, “This camera has these skin tones,” or talk about how many megapixels it has and how that results in resolution. But that’s just a self-fulfilling prophecy, a feedback loop, because a camera is really a colorimeter: it’s an array of millions of colorimeters that take 24 readings per second. The idea that the resulting data has to be shown on a screen in only one specific way, and that if you want to see the image in a different way you have to use a different camera, just doesn’t make sense. It’s like saying an architect is going to create a different house if he takes all his surveying measurements with a different brand of ruler. The camera is just collecting information; how you display that information later is not dictated by the camera brand. But it’s been so ingrained in us that the machine that takes the measurement decides what to do with the data. We’re so conditioned to believe this that we don’t even look at a lot of the stuff that’s down the road in the image pipeline and can be far more instrumental in the final image quality. But because of the feedback loop, we’ve tended to believe that cameras do have different inherent looks. And we make decisions based on that false premise.
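Yedlin’s colorimeter analogy can be illustrated with a toy sketch. The readings below are made-up scene-linear values, and the two rendering functions (`render_clip` and `render_rolloff`, both hypothetical names) are generic textbook curves, not any camera maker’s or Yedlin’s actual transforms. The point is only that one set of measurements can be shown in two different ways without changing the device that took them.

```python
import numpy as np

# Hypothetical linear sensor readings from six photosites (scene-referred; 0.18 = mid gray).
# These are illustrative numbers, not data from any real camera.
readings = np.array([0.01, 0.05, 0.18, 0.5, 1.0, 4.0])


def encode(x: np.ndarray) -> np.ndarray:
    """Simple 1/2.4 power-law display encode for values already in [0, 1]."""
    return x ** (1.0 / 2.4)


def render_clip(x: np.ndarray) -> np.ndarray:
    """Hypothetical rendering A: hard-clip everything above 1.0, then encode."""
    return encode(np.clip(x, 0.0, 1.0))


def render_rolloff(x: np.ndarray) -> np.ndarray:
    """Hypothetical rendering B: Reinhard-style x / (1 + x) highlight roll-off, then encode."""
    x = np.clip(x, 0.0, None)
    return encode(x / (1.0 + x))


# Same measurements, two different downstream display decisions.
look_a = render_clip(readings)
look_b = render_rolloff(readings)

for r, a, b in zip(readings, look_a, look_b):
    print(f"reading {r:5.2f} -> A {a:.3f}  B {b:.3f}")
```

Rendering A sends both the 1.0 and 4.0 readings to the same white, while rendering B keeps them distinct; the difference comes entirely from the downstream choice, not from the measurements.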

Both parts of your video have a very clear way of presenting the information. How did you come up with the strategy you used?

It’s just the way I do these kinds of things, so the methodology was the easy part. But I would say the single most important section comes about five minutes into the second part, when we compare a 2K source for a 4K master to a 6K source. That’s the perfect example of what I’m talking about in terms of controlling variables, because the variables are controlled in the most absolute way possible. People might compare 6K to 2K using entirely different cameras, so now you have different noise, different photosites, everything’s different, whereas what I was doing was using literally the same image for the comparison. Not only the same camera, but the same frame, so you don’t even have frame-to-frame variables impacting the comparison. So the idea was: what if I only have 2K of this 6K image, what if I have to choke it all the way down to 2K? What does that image look like in comparison to the 6K original after it is scaled up to 4K? And then we see how very little the resolution actually matters; it’s almost indistinguishable. And from an audience perspective, there’s no big difference in terms of their perceptual experience. What you can see, however, is that while you get almost no difference from the resolution change, you do get a difference from the scaling algorithms used to go from 6K to 4K and 2K to 4K. That does have a subtle perceptible effect, and much more of an effect than the difference in megapixels. That’s all about trying to isolate and control variables so there can be a fair comparison and decisions can be made meaningfully. So my methodology was nothing new. What took the most work was creating the language for the presentation: to be clear and unambiguous in a sort of scientific way while still being evocative. This whole thing is for a very rarefied audience. I don’t have to worry about keeping a general audience interested with this, but even within my target audience, people can quickly lose focus when even good information is presented poorly. You have to keep them engaged while still being rigorous. So striking that balance was important, and it was the hardest part. But really sculpting that language has given me the tools to be even more clear about my position when I have to have that discussion again.
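The same-frame comparison he describes can be sketched numerically. This is a minimal illustration under stated assumptions, not Yedlin’s pipeline: the frame is synthetic, the rasters are roughly 1/10-scale stand-ins (600, 400 and 200 pixels wide for “6K”, “4K” and “2K”), the Lanczos-3 and triangle kernels are generic choices, and RMS difference is a crude numerical proxy for what he evaluates by eye on a projector. Each comparison changes exactly one variable against the same baseline.

```python
import numpy as np


def triangle(x):
    """Triangle (bilinear) resampling kernel."""
    return np.clip(1.0 - np.abs(x), 0.0, None)


def lanczos3(x):
    """Lanczos kernel with a = 3 (np.sinc is the normalized sinc, sin(pi x) / (pi x))."""
    return np.where(np.abs(x) < 3.0, np.sinc(x) * np.sinc(x / 3.0), 0.0)


def weight_matrix(n_in, n_out, kernel):
    """Each row maps one output sample to a weighted sum of input samples along one axis."""
    scale = n_in / n_out
    stretch = max(scale, 1.0)  # widen the kernel when shrinking so it also low-pass filters
    centers = (np.arange(n_out) + 0.5) * scale - 0.5  # output pixel centers in input coordinates
    offsets = np.arange(n_in)[None, :] - centers[:, None]
    w = kernel(offsets / stretch)
    return w / w.sum(axis=1, keepdims=True)  # renormalize so flat areas stay flat, even at edges


def resize(img, out_h, out_w, kernel):
    """Separable resize: the same kernel is applied vertically and horizontally."""
    wy = weight_matrix(img.shape[0], out_h, kernel)
    wx = weight_matrix(img.shape[1], out_w, kernel)
    return wy @ img @ wx.T


def rms(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))


# Hypothetical synthetic "6K" frame: smooth large-scale structure plus a little fine grain.
rng = np.random.default_rng(0)
coarse = rng.random((30, 60))
frame_6k = resize(coarse, 300, 600, lanczos3) + 0.02 * rng.standard_normal((300, 600))

# Baseline: the 6K frame scaled straight to the 4K master raster.
master_from_6k = resize(frame_6k, 200, 400, lanczos3)

# Only resolution changes: choke the SAME frame to 2K, then scale it up to 4K with the same kernel.
master_from_2k = resize(resize(frame_6k, 100, 200, lanczos3), 200, 400, lanczos3)

# Only the scaling algorithm changes: same 6K frame, same 4K target, different kernel.
master_other_kernel = resize(frame_6k, 200, 400, triangle)

print("resolution only:", rms(master_from_6k, master_from_2k))
print("algorithm only: ", rms(master_from_6k, master_other_kernel))
```

Because every master derives from the identical frame and shares every other step, any difference between a pair can be attributed to the one thing that changed. With real footage you would judge the results visually at the intended viewing conditions rather than relying on a single number.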

Were there other cameras that you wish you could have included in the comparisons or do you feel like you had a good cross section and enough data points in that regard?

In a lot of ways, it doesn’t matter what cameras were used in my comparison. The point is that you do have to test each one. But you have all the information here that says you can’t choose a camera just based on the number of Ks. If you want your image to look resolute (without scaling or cropping or extracting), you can’t just make a decision based on the Ks. But even if you do want to do an extraction, you still can’t choose just based on the number of Ks. People will sometimes say, “I want to do my wide shot and my tight shot at the same time,” and shoot with the intention of doing an extraction, thinking that the solution is to shoot with the camera that says 8K on it. Well, you still have to test the camera and take those images through your pipeline to see what the result will be. In this presentation, we shot with cameras that range from 3K to 11K, and the highest actual resolving power is in the middle of that range, at 6K. The 3K camera is better than some of the 6Ks, and one of the 6Ks is better than the 11K. You just can’t tell what you’re going to get based on counting Ks. And this discussion is completely applicable to cameras not included in the test (like the Panavision DXL or Panasonic VariCam), because we’re not proposing that you make a decision based on this comparison, but that you make a comparison yourself through a rigorous process that properly controls variables. At a certain point, all the cameras we tested will be obsolete, but the approach will still be true and useful.
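The extraction arithmetic is easy to sketch, and it also shows its own limit. The hypothetical `extraction` helper below counts only how many photosites span a punched-in crop and how much that crop must be scaled to fill the delivery raster; it assumes “8K” means 8192 photosites across, which in practice varies by camera and recording mode. As Yedlin notes, a nominal count like this does not tell you the camera’s actual resolving power, which you still have to test through your own pipeline.

```python
def extraction(nominal_width_px: int, punch_in: float, delivery_width_px: int) -> tuple:
    """Return (photosites spanning the extracted frame, scale factor needed to fill delivery).

    A scale factor above 1.0 means the crop must be scaled UP to fill the delivery raster.
    This counts photosites only; it says nothing about how much detail the camera resolves.
    """
    crop_width = nominal_width_px / punch_in
    return crop_width, delivery_width_px / crop_width


# Hypothetical numbers: a camera with 8192 photosites across, delivering a 4096-wide master.
for punch_in in (1.0, 2.0, 3.0):
    crop, scale = extraction(8192, punch_in, 4096)
    print(f"{punch_in:.0f}x punch-in: {crop:.0f} px across, scale factor {scale:.2f}")
```

At a 3x punch-in, the crop spans about 2731 photosites and has to be scaled up by 1.5 to fill a 4096-wide master, which brings the choice of scaling algorithm back into play.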

You’ll find Yedlin’s earlier presentation, Display Preparation Demo, here and its follow-up, On Color Science, here.